Happy Holidays! 🎄🎅🎁

We are finally at the end of 2020 and that alone is an achievement.

Personally, I am cautiously optimistic about the future as vaccines are distributed around the world. However, I wouldn't count on a quick "back-to-normal" in 2021 yet.

Overall, the last month of 2020 was a disastrous disappointment for my website, primarily due to Google's last-minute surprise, the December 2020 Core Update.

This algorithm update was reported to be both "broad" and "big" - impacting many sectors and producing huge gains/losses from 10% to over 100% (source: Search Engine Journal).

In this month's income report, I'll discuss the impact of the December 2020 Core Update, my suspicions & theories on what caused it, and the steps I've taken or will be taking in the next few months to fix these problems.

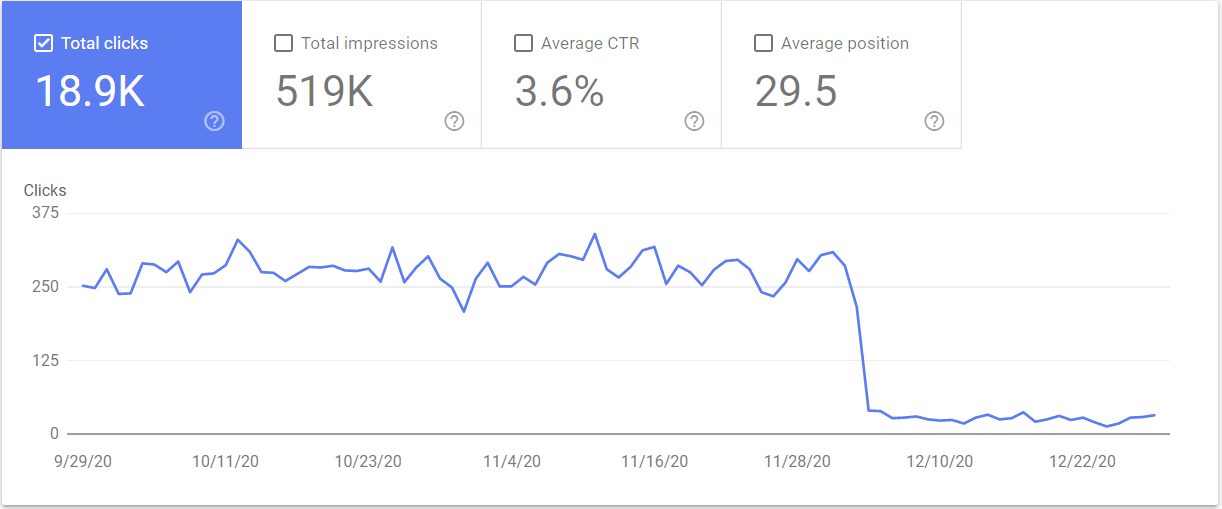

Around December 4, 2020, Google released a broad core algorithm update.

Here's how it went down:

Yikes!! 😥😥😥

This time, the damage was even worse than the September 2019 Core Update (-40% traffic).

Clearly, something is not working...

The first possibility is that I have one or more technical issues on my website.

Technical issues include the page speed, server status, robots.txt, redirections (301), etc.

In general, it's the infrastructure & software configuration of your website (such as plugins).

A slow website may contribute to the overall problem of low traffic or low rankings.

However, I don't think the algorithmic penalty was triggered by a technical issue like page speed or plugin configuration. That's because they're usually smaller factors that don't, on their own, result in a massive decrease in traffic.

But just to be safe, I've taken steps to eliminate any "technical difficulties":

1a. Cloudways

I decided to migrate my website to Cloudways (managed hosting) ahead of schedule.

As I mentioned in last month's income report, I've noticed 5xx server-level errors with SiteGround, and Google Search Console even sent me a warning about it once!

(strangely, I never had 5xx errors at Bluehost, which is owned by EIG - best known for consolidating hosting companies and turning them into garbage 🤔).

Anyways, it was definitely a good idea to switch and it wasn't even that expensive! ($10/mo with no contract at Cloudways vs. $5.95/mo with 36-month contract at SiteGround)

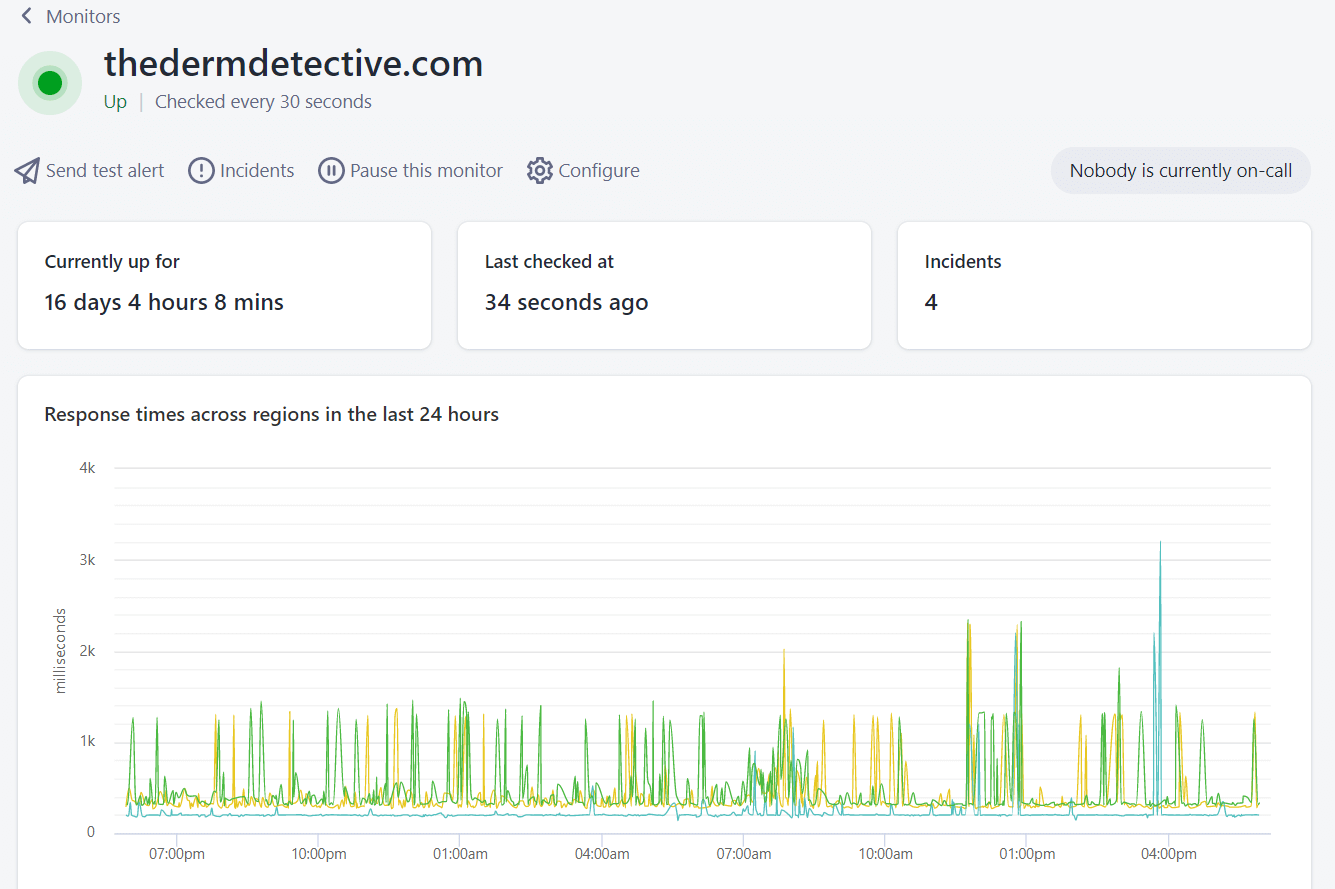

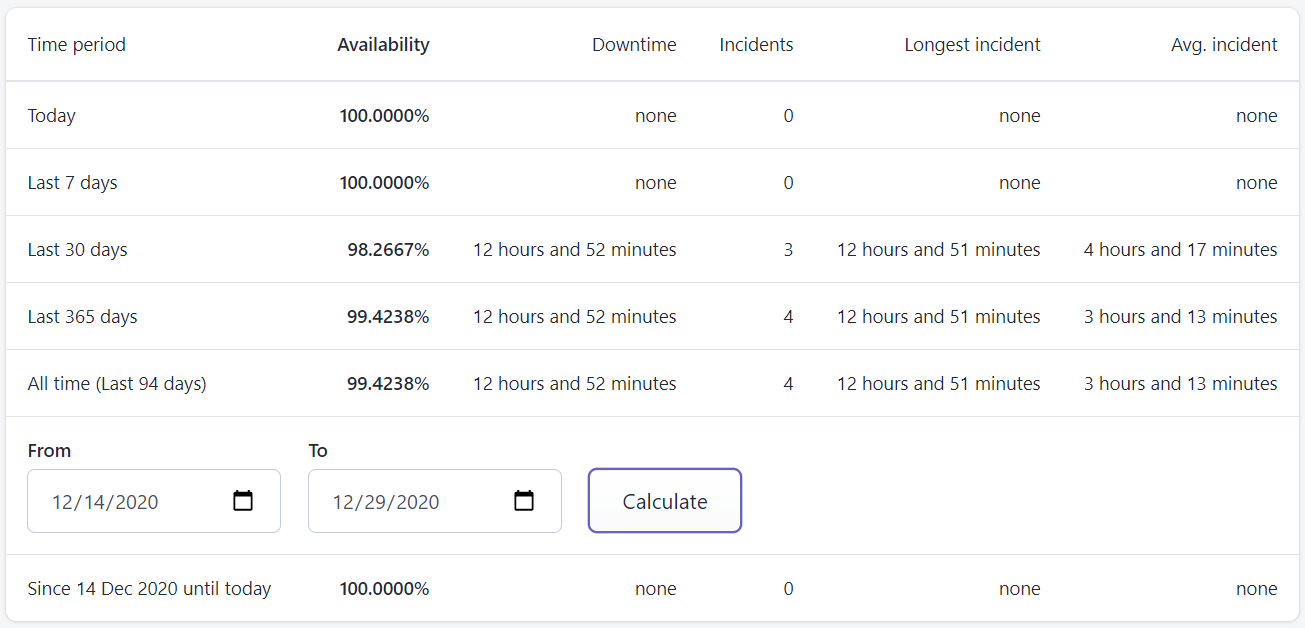

While my page speed has not changed dramatically, the overall uptime % has been better (no downtime at all yet - I use Better Uptime to monitor my website every 30 seconds).

In addition, the WordPress dashboard feels faster and more responsive, which reduces the time that I spend writing and editing posts (well worth the money!).

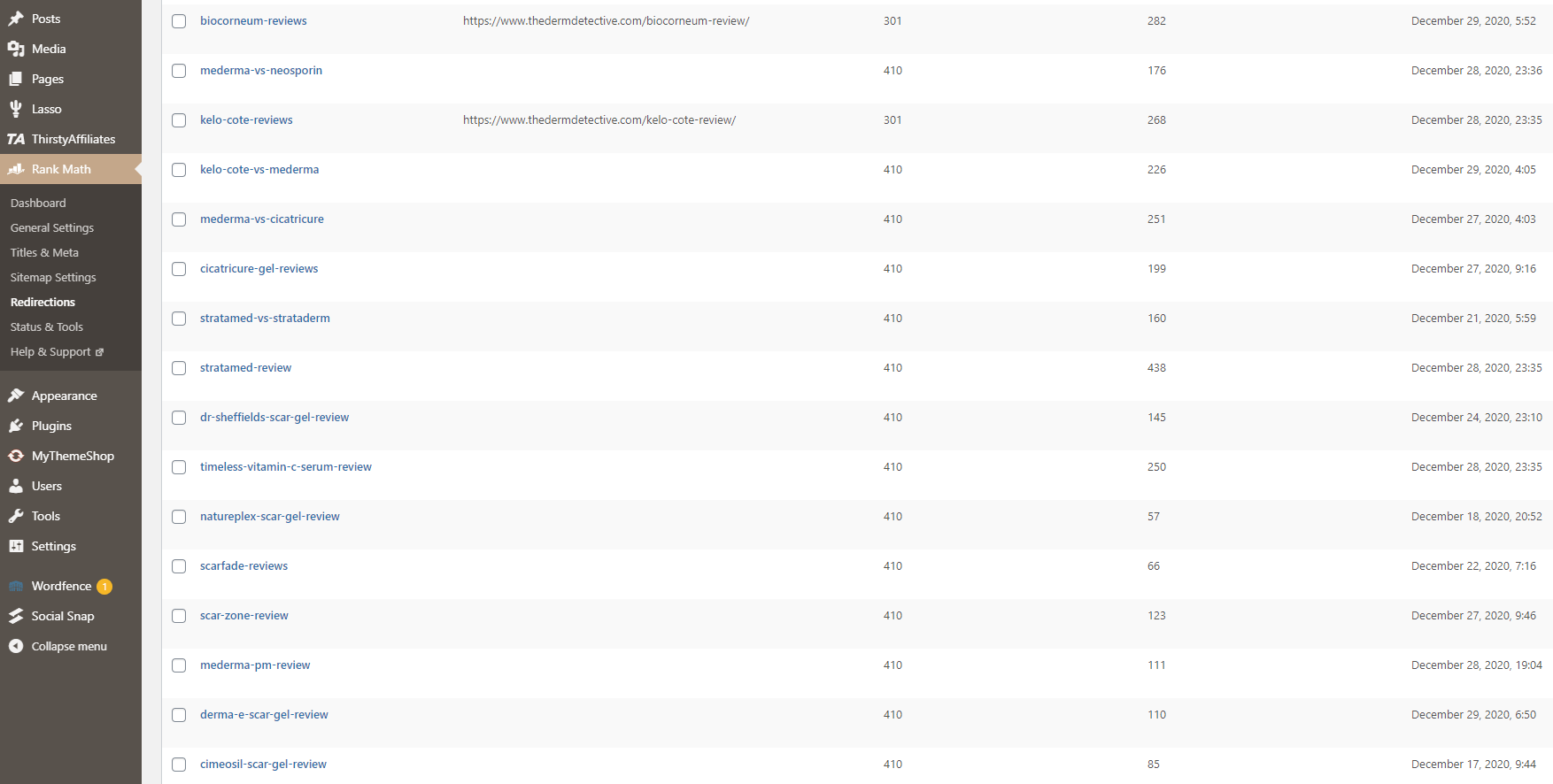

1b. Redirections (301 to 410)

During 2020, I made a few changes in content strategy.

After the Amazon Associates commission rate fiasco back in April, I decided to remove the majority of my "vs" and "review" posts in the scar treatment category, because:

When you remove a post, it's good practice to leave behind instructions to tell search engines why it was deleted and if applicable, what page they should visit instead. It's like leaving your new address at your old place so you can receive any mail that you didn't change the address for.

The online equivalent is 3xx redirections (301, 302, 307) or 4xx client errors (404, 410, 451).

You can check out the full list of HTTP status codes on Wikipedia.

When I deleted my posts, I put up a 301 redirect from the deleted post to a relevant post, to preserve any traffic that I might get and any links pointing to that post.

Now, I'm thinking that maybe I overdid the number of 301 redirects and Google is either getting confused or perhaps even penalizing my website (I doubt it, but you never know).

Given that these 301s do not provide significant value in terms of traffic or links anymore, I've decided to convert all of them into 410s (page intentionally removed).

The 410 status code tells Google that your page is gone for good, which discourages Googlebot from returning to recrawl the URL.

It essentially erases the URL from Google's index and is a cleaner solution than the 301, which says the page has been permanently moved to another URL.
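To make the 301 vs. 410 difference concrete, here's a minimal Python sketch of the decision a server makes for each request. It's not how my actual redirection plugin works, and the paths below are made up for illustration.

```python
from __future__ import annotations

from http import HTTPStatus

# Hypothetical example data: these paths are invented for illustration.
REDIRECTS = {"/old-review/": "/best-scar-creams/"}  # 301: moved permanently
REMOVED = {"/product-a-vs-product-b/"}              # 410: intentionally gone


def resolve(path: str) -> tuple[int, str | None]:
    """Return (status code, redirect target or None) for a requested path."""
    if path in REMOVED:
        return int(HTTPStatus.GONE), None
    if path in REDIRECTS:
        return int(HTTPStatus.MOVED_PERMANENTLY), REDIRECTS[path]
    return int(HTTPStatus.OK), None


def retire_redirects(redirects: dict[str, str], removed: set[str]) -> None:
    """Convert every existing 301 into a 410 by moving its source path."""
    removed.update(redirects)  # iterating a dict yields its keys (the old paths)
    redirects.clear()
```

Before calling `retire_redirects`, `resolve("/old-review/")` returns a 301 with the new location. Afterwards it returns a 410 with no location at all, which is exactly the "gone, don't come back" signal.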

The second possibility is that I have one or more content issues on my website.

Normally, I don't think "bad content" per se could ever trigger a site-wide downgrade, unless it's really overdone and all your content is "spun" (created using AI or other automated methods), plagiarized, or extremely "thin" (low word count).

If your content is "bad" (according to Google), then you simply won't rank well. 🤣

However, I've had a few posts ranking really well (that maintained their rankings) for many months before this recent update, so I don't think content was the main problem.

But to be safe, I've taken measures to improve the overall quality of my content portfolio, not just individual posts.

2a. "Thin" Content

The first step was to remove any "thin" content (typically 500 words or less).

While I never intended to create "thin" content, I did have a number of review posts that were pulled out of my best articles - my original thinking was that I could start the journey towards ranking for the review keywords without any additional effort.

I monitored my progress using a rank tracker and planned to upgrade each article once it got closer to page 1. For the most part, these posts ranked pretty well on their own, even though they were technically "cut and paste" duplicated content.

(however, a scenario like this doesn't trigger Google's "duplicate content" penalty. You'll know if it does because your post will be hidden from search results by the duplicate content filter)

Anyways, I decided to prune this type of content from my portfolio and serve a 410 code instead. I've learned that "review" keywords are usually not worth the investment either, because:

Before removing each post, I checked if it received significant clicks or impressions, the estimated keyword volume, and the competitors on page 1, before making a final decision.
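If you wanted to turn that checklist into a first-pass filter, it could look something like the sketch below. All of the thresholds (other than the 500-word "thin" cutoff) are numbers I'm assuming for the example, and the manual look at page 1 competitors isn't modeled at all, so treat the output as a shortlist to review rather than a final decision.

```python
from dataclasses import dataclass


@dataclass
class Post:
    url: str
    word_count: int
    clicks: int          # clicks over some recent window (window is an assumption)
    impressions: int     # impressions over the same window
    keyword_volume: int  # estimated monthly search volume


# The 500-word cutoff comes from the post; the rest are assumed for illustration.
THIN_WORDS = 500
MIN_CLICKS = 10
MIN_IMPRESSIONS = 1000
MIN_VOLUME = 500


def should_prune(post: Post) -> bool:
    """Flag a thin post for removal only if it has no traffic and no potential."""
    if post.word_count > THIN_WORDS:
        return False  # not thin, so out of scope for this check
    has_traffic = post.clicks >= MIN_CLICKS or post.impressions >= MIN_IMPRESSIONS
    has_potential = post.keyword_volume >= MIN_VOLUME
    return not (has_traffic or has_potential)


def prune_candidates(posts: list[Post]) -> list[str]:
    """Return the URLs worth a manual look before serving a 410."""
    return [p.url for p in posts if should_prune(p)]
```

The point of the `or` checks is to err on the side of keeping a post: a thin post survives if it shows any sign of life.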

2b. Content Simplification (Lasso)

The second step was to reconsider my approach to content creation.

While I'm personally in favor of long-form content (such as this blog post), I recognize that 90% of users are looking for short-form content like lists, infographics, and bullet points, the types of content that you can consume very quickly or skim through.

Unfortunately, it's a long-term trend as people develop shorter and shorter attention spans.

(as we can observe with the popularity of TikTok, for example).

Anyways, I've decided to use a different content layout for my "best" posts. My previous template was very good from a conversion perspective, but it required a lot of manual formatting.

This month, I stumbled across Lasso which inserts well-designed product boxes (called "displays") into my content. Here's an example:

I really like the design - it's very clean & simple which should be good for conversions - and it saves me a lot of time on manual formatting and link insertions.

However, I still have to manually insert elements like the product title, product image, banner text (in the ribbon), product description, and affiliate link. For Amazon products, some of these elements are pulled automatically using the API (like the current price).

Lasso is a bit expensive, though, at $19/mo ($190/yr), but after speaking to the founder via email, I'm pretty bullish on the product and their roadmap of future features (like comparison tables).

I also stopped using Geni.us which saves me $9/mo - so Lasso is like $10/mo net.

It bridges the gap between Amazon Affiliate for WordPress (AAWP) and ThirstyAffiliates (TA), both of which I've used in the past.

AAWP produces amazing comparison tables and product boxes but only supports Amazon.

TA supports non-Amazon links but doesn't produce any type of product tables.

With the Lasso displays, I see opportunities to recommend products within non-commercial content like tutorials and guides as well.

When you're explaining how to solve a problem, you might recommend a product that you like, and instead of using a plain text link, you could drop a stylish Lasso display.

2c. Content Optimization (Frase)

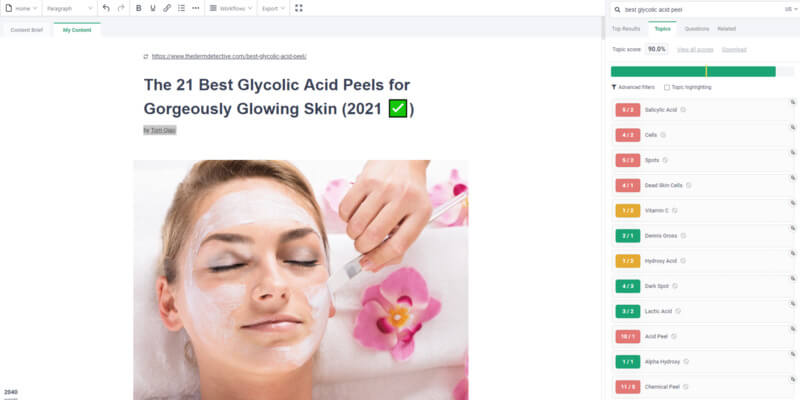

Last month, I bought a lifetime deal for Frase.io, a content research and on-page SEO tool.

I'm currently using it to "polish" my completed posts by adding keywords or phrases that my competitors are using but I'm not.

Frase scrapes the top 20 results for a target keyword, runs it through their proprietary algorithm, and produces a report of topics along with a topic score.

While Frase has many features, I mainly look at the topic score to make sure I've covered most of the relevant topics.

Frase helps me fill in my "blindspots" as I tend to overuse certain words or phrases and underuse other ones.

It's a nice middle ground between a sophisticated on-page tool like Surfer or POP, which use correlational or single-variable analysis to provide recommendations, and just using a template.

TBD on whether Frase will actually help with rankings but the overall approach is very reasonable (don't miss anything your competitors are doing).
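Frase's algorithm is proprietary, so I can't show what it actually does, but the basic "don't miss what your competitors cover" idea can be sketched in a few lines of Python. This is a naive stand-in that I wrote for illustration: it only compares single words, and the `min_share` cutoff is an assumption.

```python
import re
from collections import Counter


def terms(text: str) -> set[str]:
    """Lowercase a text and return its unique alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def missing_terms(my_text: str, competitor_texts: list[str],
                  min_share: float = 0.5) -> list[str]:
    """Words used by at least `min_share` of competitors but absent from my text."""
    mine = terms(my_text)
    counts: Counter = Counter()
    for text in competitor_texts:
        counts.update(terms(text))  # each competitor counts a word at most once
    threshold = min_share * len(competitor_texts)
    return sorted(t for t, n in counts.items() if n >= threshold and t not in mine)
```

For example, if my post only says "silicone gel for scars" and most competing pages also mention "sheets", then `missing_terms` would surface "sheets" as a blindspot. A real tool works with topics and phrases rather than single words, so this is only the rough shape of the idea.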

Finally, the last and most likely possibility is that I have one or more link issues.

3a. Domain Authority

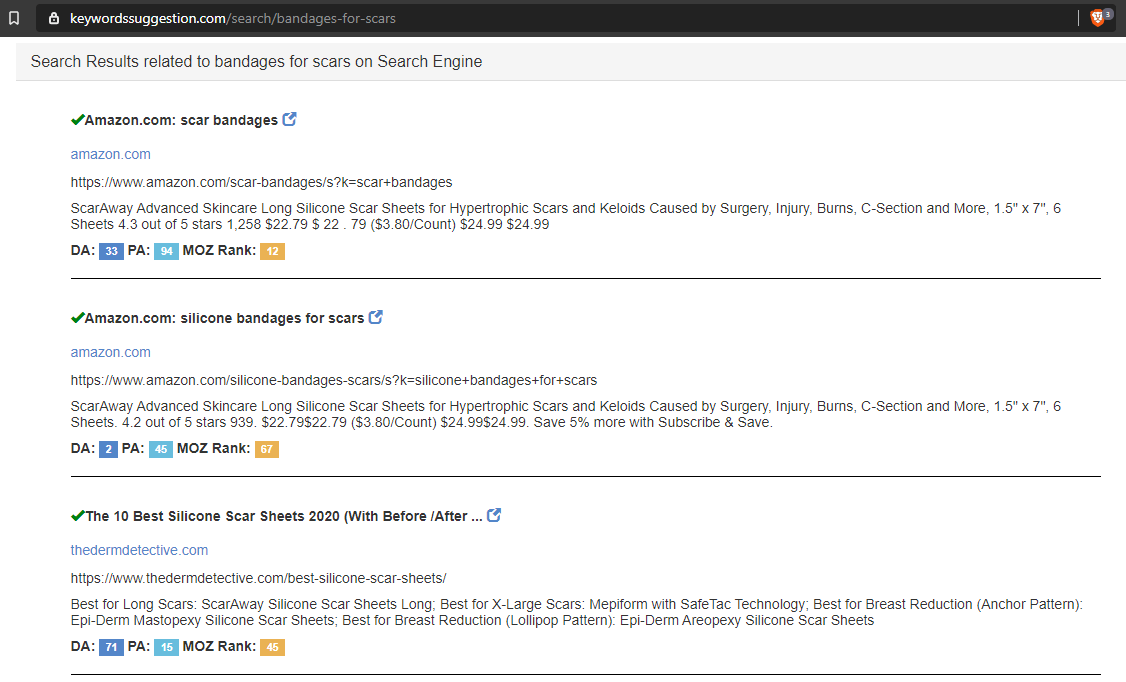

After the December 2020 Core Update, my first train of thought was I needed more domain authority (i.e. more & better backlinks) to make up the gap with my competitors.

I noticed during a manual review of the SERPs (of keywords I lost) that there was no major movement in rankings for the competition, especially if they were massive websites (like Byrdie, Bustle, Refinery29, online magazines, specialty retailers, etc.).

Side Note: I use a rank tracker called SERPWatch that automatically saves a ranking history of the competition for a target keyword. 👍

Based on my observations, my working theory is that the higher your domain authority, the more difficult it is to drop off the SERPs during an update - it's like having a giant anchor weigh you down against the "storm" of Google Updates.

(however, I have seen in Ahrefs cases of massive traffic declines for large DR 90 websites, so they're definitely not 100% immune).

In 2020, I built links exclusively through paid guest posts at SEOButler and Authority Builders.

In 2021, I plan to expand into organic outreach and relationship building to diversify my link profile (more on that in just a bit).

I also didn't have a "link plan" in 2020 (i.e. targets, distribution, and schedule).

Link building was ad hoc and based on which posts I wanted to boost.

That's why I want to execute a more comprehensive link building strategy that focuses on building overall domain authority, rather than individual pages, along with a consistent schedule.

I'm planning to use a mix of agency links (only high-quality guest posts), custom email outreach with niche-relevant websites (good DR, good traffic, history of increasing traffic, low spam scores), and hiring a dedicated link building agency (but only if they do real outreach).

Back in H1 2019, pre-Chiang Mai SEO Conference, I ran "shotgun skyscraper" outreach campaigns that ultimately did not result in many quality links (and were extremely time-inefficient).

However, this time I'm taking a more nuanced approach to "white hat" link building - I'm focused on the quality metrics of the domain and niche relevancy, not whether I have to pay them or not.

The fact is, you can get links for "free" and they can still turn out to be duds (or worse, even count against you in Google's algorithm).

Most webmasters / bloggers today won't even reply to your "guest post" outreach email and the ones that do almost always want to be paid.

Finally, it's worth noting that the best links, the ones that are 100% natural and editorially placed, only come when actual writers & editors decide (or are sometimes bribed or influenced) to include you in their content, and that only happens if you have something authoritative, creative, data-driven, or unique.

So the last piece of my link puzzle is to create higher quality info content, find effective channels to share them with niche-relevant websites and influencers, and if possible, rank them so that there's some chance of getting an organic backlink from someone (because buying guest posts will almost never get you a quality link from a top tier publication).

3b. Backlink Audit & Disavow File

My second train of thought didn't arrive until late December when I received an email from Authority Builders about their new Link Audit service.

This service is performed by Rick Lomas from Link Detective (great name, btw! 🤗).

The link audit includes a full review of your website's backlinks (using data from Ahrefs, SEMRush, and LinkResearchTools), a disavow file (this tells Google which links you want them to "ignore"), and an explainer video.

I definitely felt I could benefit from a link audit, given my current situation, but I really didn't know much about doing a link disavow.

After doing a bit of research (mainly reading the guide by Ahrefs here), I learned that disavows are usually reserved as a last resort, as doing them can actually harm your website since you might be removing links that were passing decent PageRank to you.

However, the clearest indication for a disavow is either a manual penalty (Google basically blocks your website from search results) or a massive algo hit (which is the category I'm in).

Once I had decided to do a link audit & disavow, the only question was whether to pay for a professional link audit ($297) or do it myself.

The fee wasn't too bad, compared to the money I've been throwing at paid guest posts, but I ultimately decided to DIY and learn more about the link audit process.

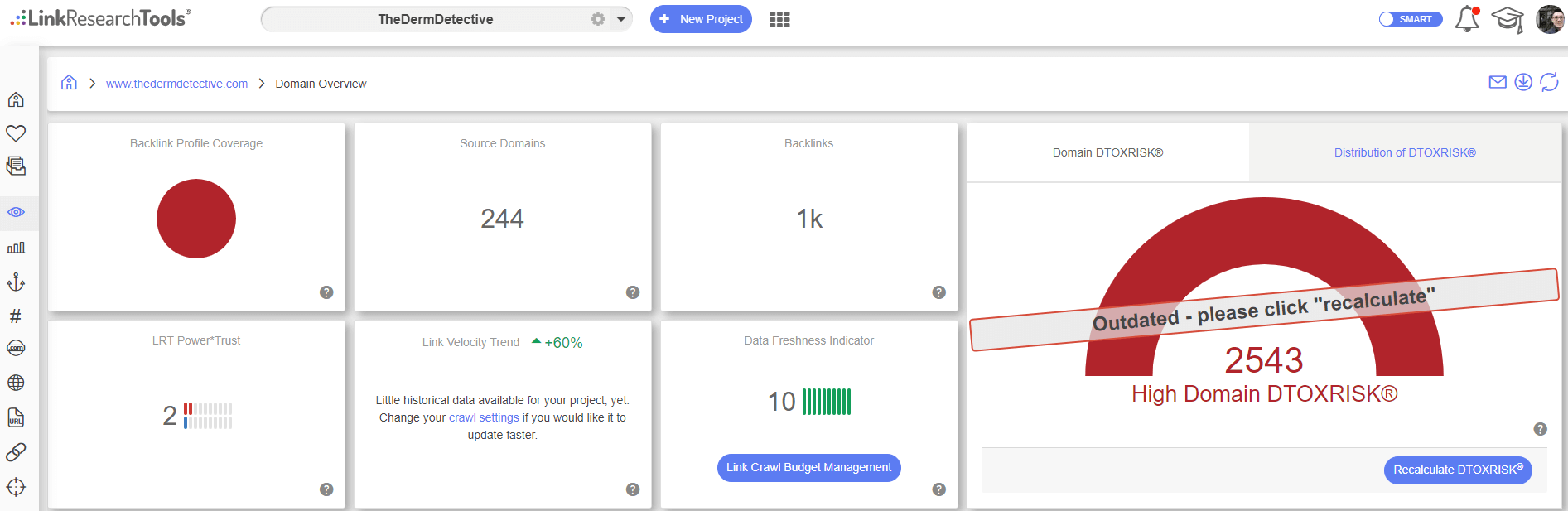

I read through Rick Lomas' FAQ page and it seems the main data source for his report comes from LinkResearchTools (LRT), a specialty SEO tool for link analysis.

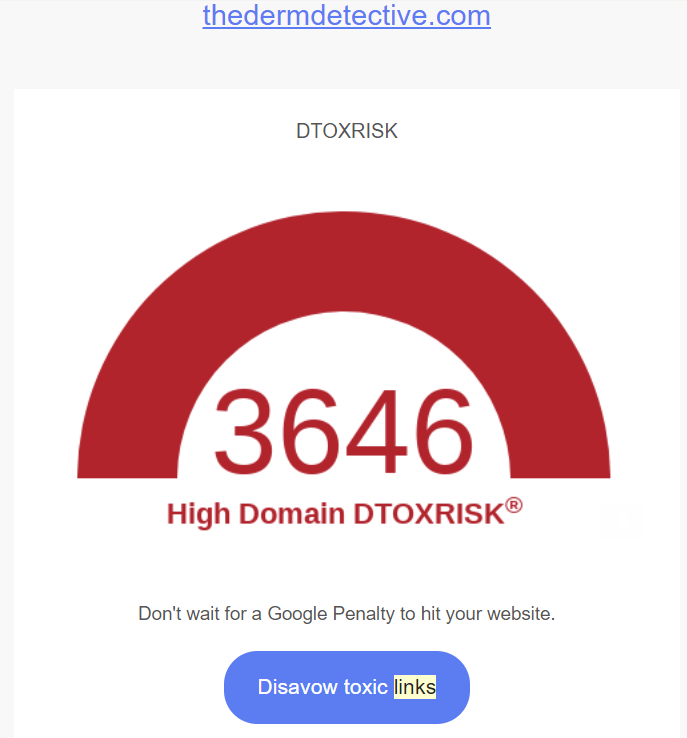

Fortunately, they have a $7 trial for 7 days so I signed up and ran their Link Detox Smart and this is what happened:

The tool calculated a huge red DTOXRISK score (their proprietary grading system for spamminess).

Actually, my original DTOXRISK score was even higher, like 3600+ (LRT says that anything over 1,000 "very likely causes a link penalty" 😑 - source: LRT).

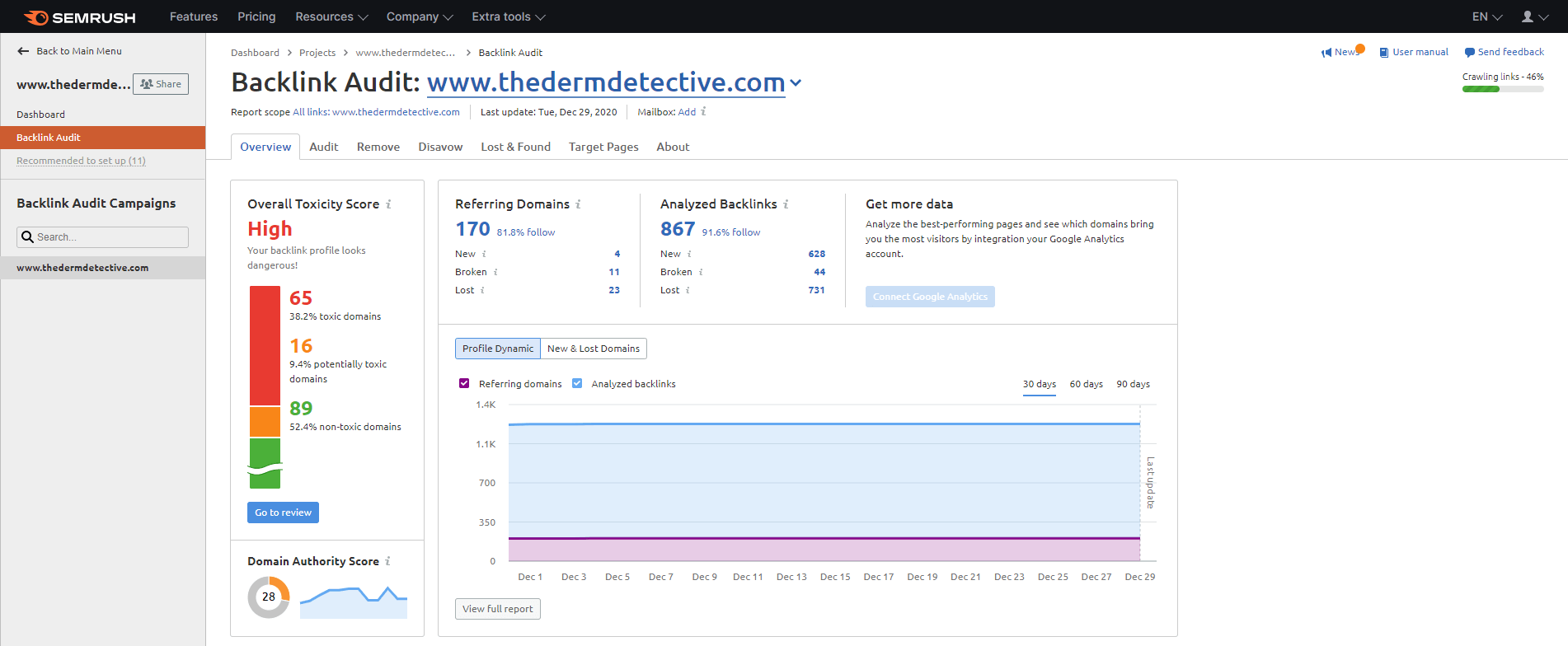

By the way, I also checked SEMRush's domain toxic score and it's pretty high too:

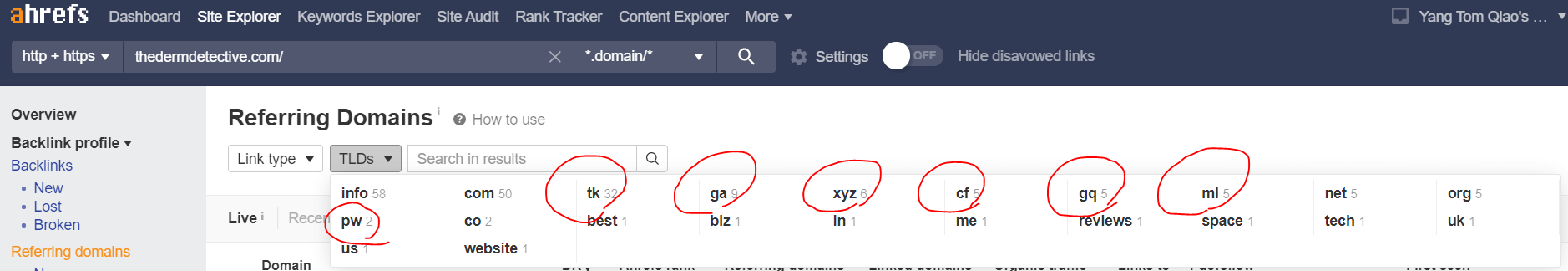

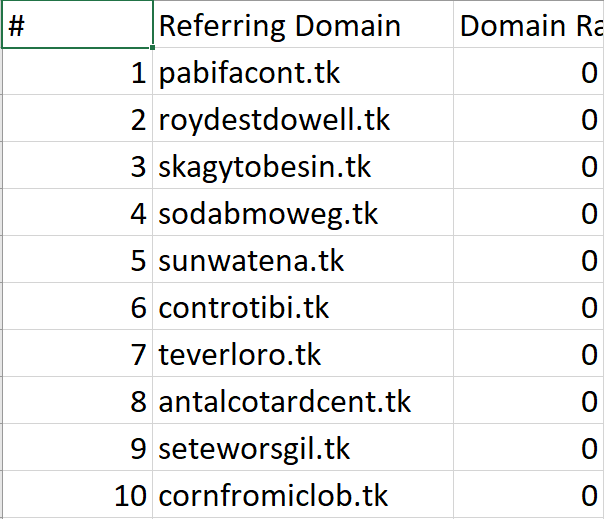

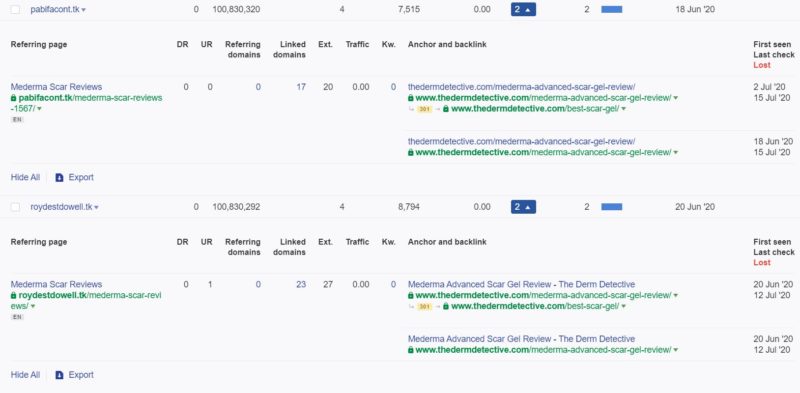

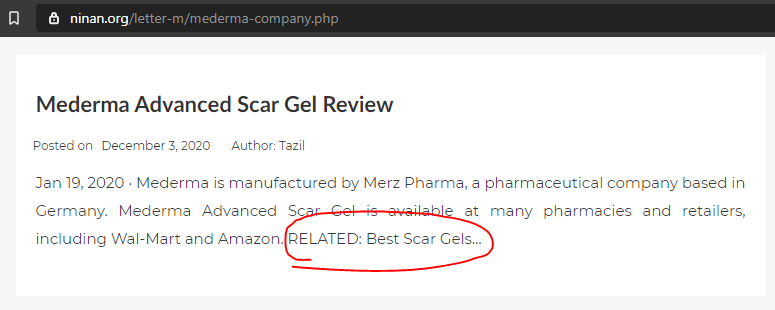

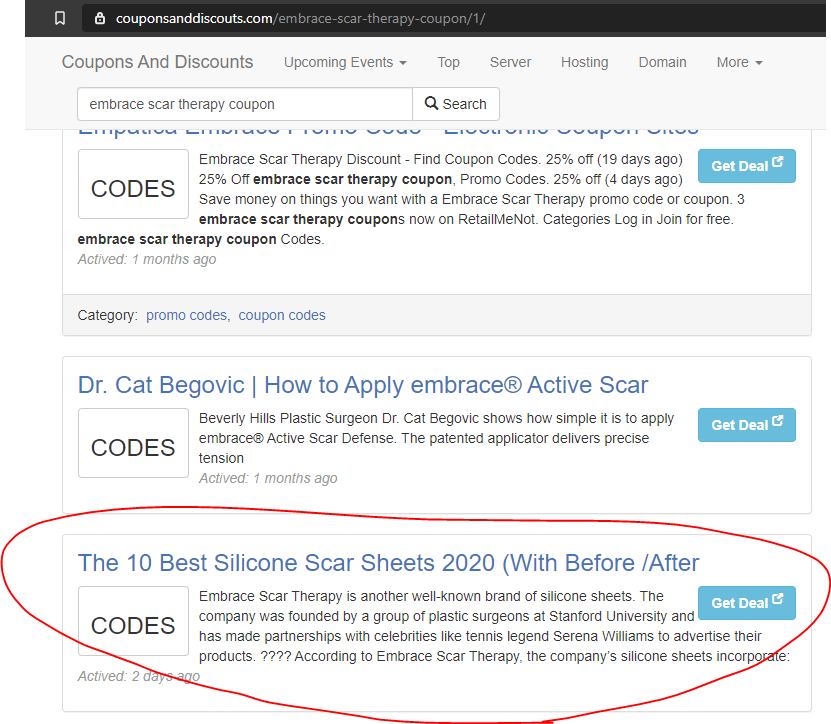

Okay, so I was a bit surprised at how "toxic" my link profile was, but after reviewing the "suspicious links" (btw, LRT organizes them very nicely based on DTOX score, whether the domains returned a 400 or 500 error, whether the domain has a PBN footprint, and a ton of other metrics), I discovered that the culprits were spammy websites that were scraping my content or images, often providing a "money" anchor text.

In fact, there were lots of similarities across the spammy websites, including:

Now, I've also been trying to understand why I got these backlinks in the first place (there was a mix of dofollow and nofollow). For the most part, the links were placed on pages that were clearly scraped using some kind of software program.

Here's how I would categorize the spammy websites:

In any case, I manually reviewed each domain and 99.9% of the time, decided that it was spammy enough to warrant a disavow. This took a few hours because, unfortunately, LinkResearchTools does not allow you to export the disavow file with their trial version.

SEMRush and Ahrefs both allow you to create and export a disavow file, though.

SEMRush actually has a very good link audit tool that looks at similarities between bad links including identical pages, titles, file paths, Google Analytics ID, Google Ads ID, etc.

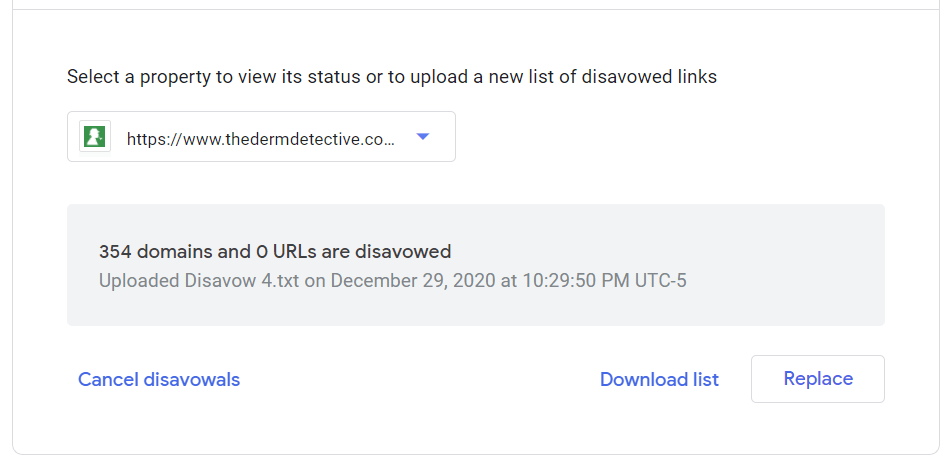

In total, I disavowed 354 domains and submitted the disavow file to Google Search Console.

I know, it sounds like a LOT, but there was just so much SPAM there.

The other reason is that my website has been up now for 2 years and I've never done a disavow before so these types of links have been accumulating in the background.

I've actually noticed many of them in the past on my backlink profile in Ahrefs but never thought to disavow them (due to the risk of harming yourself with a disavow).

Now, a disavow file can take a few weeks for Google to process and then recalibrate, so if there's going to be any impact, I should see it around February.

If I don't see a recovery by then, I'd also consider disavowing my paid guest posts as some of them (not all) were flagged by LRT as suspicious with high DTOX scores.
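For anyone curious about the disavow file itself: it's just a plain text file with one entry per line, where `domain:example.com` disavows a whole domain and lines starting with `#` are comments. If you end up compiling the list by hand like I did, a small Python helper can build the file for you. This is a rough sketch, and the domains and file name in it are placeholders, not the ones I actually disavowed.

```python
def build_disavow(domains: list[str], note: str = "Spammy scraper sites") -> str:
    """Build disavow file contents: a comment line, then one domain: line each."""
    # Normalize, drop blanks, and de-duplicate before sorting for a stable file.
    cleaned = sorted({d.strip().lower() for d in domains if d.strip()})
    lines = [f"# {note}"] + [f"domain:{d}" for d in cleaned]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder domains for illustration only.
    reviewed = ["spam-one.example", "Spam-Two.example ", "spam-one.example"]
    with open("disavow.txt", "w", encoding="utf-8") as handle:
        handle.write(build_disavow(reviewed))
```

The resulting `disavow.txt` is what you upload through the disavow links tool in Google Search Console.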

At this point, I think it's worth discussing the possibility of a negative SEO attack.

A negative SEO attack is when (usually) a competitor sends a bunch of spammy backlinks and over-stuffed anchor texts to your page, in hopes of getting Google to lower your rankings and effectively increase their own rankings.

Now, Google says that you shouldn't worry about negative SEO because they "take care of it" by devaluing the spammy links so they don't pass any negative effects to your website - however, some SEO pundits are not convinced this is true.

Personally, I don't entirely believe that I was targeted by negative SEO because, frankly, my website is very small, so it doesn't seem logical for someone to target me as they'd have to spend resources (money & time) to send bad links my way in the first place.

However, most of the spam links were directed at a handful of pages, rather than being evenly spread out. For some reason, certain pages were targeted by the scraping software - it could be a coincidence (due to their keyword selection process for creating the auto-generated content) or it might suggest an intentional attack. 🤔
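One way to put a number on "a handful of pages" is to measure what share of the spam links point at the top few target URLs. Here's a small Python sketch, assuming you've exported the flagged backlinks and pulled out the target URL of each one (the export format and the `top_n` value are my assumptions).

```python
from collections import Counter


def top_share(target_urls: list[str], top_n: int = 5) -> float:
    """Fraction of all links that point at the `top_n` most-linked target pages."""
    counts = Counter(target_urls)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    top = sum(n for _, n in counts.most_common(top_n))
    return top / total
```

A share close to 1.0 means the links are heavily concentrated on a few pages. It doesn't tell you why, though, so it can't distinguish a scraper's keyword selection from a deliberate attack.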

Whew, this is a pretty long blog post already. Thanks for reading through it!

At this point, I'm cautiously optimistic that the changes I've made, especially the link audit and disavow process, will restore at least some of my original traffic.

Part of the reason I remain hopeful is that my rankings did not completely tank following the algorithm update. In most cases, I lost traffic because I went from #1-3 for a high volume keyword to #9-10 or back to page 2.

That tells me that Google still thinks my page is very relevant, but just doesn't have enough of the other factors (domain authority, etc.) to rank at the top of the SERP.

In 2021, I plan to continue building high-quality content (especially using Lasso displays) and execute a more comprehensive link building strategy to build domain authority.

My goal next year is to build $2,500-$3,000/mo of income, of which $2,000 will come from my current website, and $500-$1,000 from my second website (which I'm currently researching).

Even though I've had a disappointing end to 2020, I take comfort in the fact that I was able to achieve my target of $1,000/mo (a level that I feel is significant enough to demonstrate the potential earnings of this business) in 3 months out of 12.

Despite two major setbacks (Amazon & Google), and a worldwide pandemic, I genuinely believe that I've been building my business back better after each obstacle:

I also believe the experience that I've accumulated in this process has been rewarding and will be extremely useful for the future.

If I launch an ecommerce store or join a tech startup later on, these foundational SEO skills and general understanding of websites and the Internet will be very helpful.

To a prosperous, healthy, and successful 2021! 🎆

Tom